What is the EU AI Act?

The European Union Artificial Intelligence Act (‘AI Act’) is the world’s first comprehensive regulation governing the use of AI in the European Union. The European Commission proposed it in April 2021, and after adoption by the EU legislators it entered into force on 1 August 2024.

The AI Act sets rules for the development, placing on the market, putting into service and use of AI. Its aim is to encourage the adoption of human-centric and trustworthy AI while ensuring that the health, safety and rights of EU citizens are protected. AI systems are classified according to the level of risk they pose.

The European Parliament’s purpose in introducing the AI Act is to make AI systems in the EU safe, transparent, traceable, non-discriminatory and environmentally friendly. The Act also establishes obligations for AI developers and users depending on the level of risk their systems pose.

In February 2024, the European AI Office was established to oversee the AI Act’s enforcement and implementation in the EU Member States. The AI Office will be responsible for supervising powerful AI models (general-purpose AI models).

The governance of the AI Act is also supported by the European Artificial Intelligence Board, a Scientific Panel of independent AI experts and an Advisory Forum of commercial and non-commercial stakeholders.

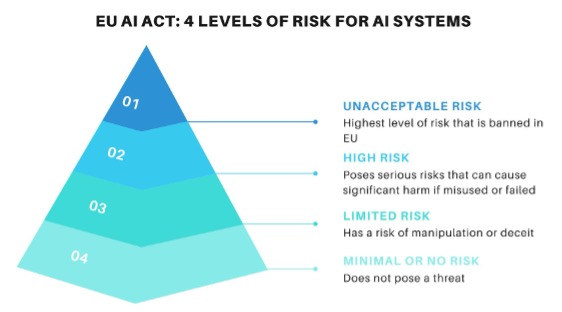

Levels Of Risk Under The EU AI Act

The AI Act categorizes AI systems into four risk levels based on their potential impact.

Unacceptable Risk

This is the highest level of risk: systems in this category pose serious threats to health, safety or fundamental rights. The assessment takes into account both the severity of the possible harm and the probability of its occurrence. The AI Act prohibits social scoring systems, real-time remote biometric identification in publicly accessible spaces (subject to narrow exceptions) and systems that manipulate behavior and decisions. AI systems that pose this type of risk are banned.

High Risk

An AI system is high risk when it can affect the safety or fundamental rights of a person. These AI systems are assessed before being placed on the market and throughout their life cycle.

According to the AI Act, an AI system does not pose a high risk if it does not materially influence decision-making or harm the legal interests of a person. To determine whether the AI system influences the outcome of a decision, the following conditions are considered:

1. Narrow Procedural Tasks

The AI system is intended to only perform a narrow procedural task. The tasks performed by this type of system pose limited risks.

2. Improve Human Work

The system is used to improve the result of work a human has already completed, for instance by improving the language used in a document.

3. Detect Patterns

The AI system is used only to detect decision-making patterns, or deviations from them, in previous decisions. The risk is low because the system follows previously completed human work and is not meant to replace or influence it.

4. Preparatory Tasks

The AI system performs only tasks that are preparatory to an assessment. The risk is very low because its output has minimal impact on the assessment that follows.
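The four conditions above lend themselves to a simple screening checklist. The following is a minimal sketch, not a legal test: the class, field and function names are hypothetical, and it assumes that meeting at least one condition is enough to argue that a system does not materially influence decision-making. Any real determination should be checked against the text of the AI Act.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ConditionAnswers:
    """Hypothetical self-assessment answers, one flag per condition."""
    narrow_procedural_task: bool = False
    improves_completed_human_work: bool = False
    detects_patterns_only: bool = False
    preparatory_task_only: bool = False


def met_conditions(answers: ConditionAnswers) -> list[str]:
    """Return the names of the conditions answered with True."""
    return [name for name, value in asdict(answers).items() if value]


def may_fall_outside_high_risk(answers: ConditionAnswers) -> bool:
    # Assumption: a single met condition is sufficient for this screening step.
    return len(met_conditions(answers)) > 0


# Example: a tool that only polishes the wording of a finished document.
language_tool = ConditionAnswers(improves_completed_human_work=True)
print(met_conditions(language_tool))
print(may_fall_outside_high_risk(language_tool))
```

Recording which condition was relied on, rather than only a yes/no result, makes the reasoning easier to document later.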

Limited Risk

AI systems that pose limited risks, such as chatbots, must be transparent about the fact that AI is being used. The AI Act imposes disclosure obligations so that people interacting with the AI system know this and can exercise caution.

Minimal or No Risk

The AI Act does not focus on AI systems that pose little to no risk. AI systems that fall in this category would include AI-enabled video games and spam filters.
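For teams keeping an inventory of their AI systems, the four levels can be recorded as a small lookup. This is an illustrative sketch only: the enum, the `TREATMENT` mapping and the inventory entries are hypothetical, and the one-line summaries paraphrase this article rather than the legal text.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Summaries of how this article describes each level (not legal wording).
TREATMENT = {
    RiskLevel.UNACCEPTABLE: "banned",
    RiskLevel.HIGH: "assessed before market entry and throughout the life cycle",
    RiskLevel.LIMITED: "transparency and disclosure obligations",
    RiskLevel.MINIMAL: "not a focus of the AI Act",
}


def treatment_for(level: RiskLevel) -> str:
    """Look up the summary treatment for a risk level."""
    return TREATMENT[level]


# Hypothetical inventory using the examples named in this article.
inventory = {
    "social scoring system": RiskLevel.UNACCEPTABLE,
    "customer service chatbot": RiskLevel.LIMITED,
    "spam filter": RiskLevel.MINIMAL,
}

for system, level in inventory.items():
    print(f"{system}: {level.value} -> {treatment_for(level)}")
```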

Roles under the EU AI Act

The AI Act applies to providers, deployers, importers and distributors of AI systems. It applies to these parties if they market an AI system, serve AI system users or utilize the output of the AI system, regardless of their location.

The AI Act also covers the situation where an operator based in the EU contracts an operator in a third country to perform certain services using an AI system. To protect the interests of EU citizens, the AI Act applies to providers and deployers of AI systems established in a third country if the output produced by the AI system is intended to be used in the EU.

Below are the obligations of each role under the AI Act for high-risk AI systems:

Providers

(including those who have developed and plan to introduce it in the market or provide services under its name)

Obligations of providers of high-risk AI systems include:

- Have a quality management system to ensure compliance with the AI Act

- Ensure the high-risk AI system is compliant with the requirements of the AI Act

- Indicate the provider’s name, registered trade name or registered trademark and address on the AI system, its packaging or its accompanying documents

- Keep the required technical documentation

- Keep the logs automatically generated by the high-risk AI systems

- Ensure that the high-risk AI system undergoes the relevant conformity assessment procedure before it is placed on the market or put into service

- Create an EU declaration of conformity

- Affix the CE marking to the high-risk AI system or to its packaging or documentation

- Comply with registration obligations

- Take the necessary corrective actions and provide the information required

- Demonstrate, when a competent authority requests it, the conformity of the high-risk AI system with the AI Act’s requirements

- Ensure that the high-risk AI system complies with accessibility requirements

Deployers

The deployers must:

- Take appropriate technical and organizational measures to ensure that they use high-risk AI systems in accordance with the instructions for use.

- Monitor the functioning of the AI systems and have record-keeping measures.

- Ensure that the people who are responsible for installing and monitoring the AI system have adequate AI literacy, training and authority to do so.

- Prepare risk management strategies

Importers

The importers must ensure that the AI system conforms with the AI Act by verifying that:

- The provider of the high-risk AI system has carried out the conformity assessment procedure outlined in the AI Act;

- The provider has drawn up the technical documentation required by the AI Act;

- The system bears the CE marking and is accompanied by the EU declaration of conformity and instructions for use; and

- The provider has appointed an authorized representative.
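The importer checks above can be tracked as a simple pre-market checklist. The sketch below is an assumption-laden illustration: the `ImporterChecks` class and its field names are hypothetical labels for the items listed in this article, not terms defined by the AI Act.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ImporterChecks:
    """Hypothetical record of what the importer has verified."""
    conformity_assessment_done: bool
    technical_documentation_drawn_up: bool
    ce_marking_present: bool
    eu_declaration_of_conformity_present: bool
    instructions_for_use_present: bool
    authorized_representative_appointed: bool


def outstanding_checks(checks: ImporterChecks) -> list[str]:
    """Return the names of checks that have not yet passed."""
    return [f.name for f in fields(checks) if not getattr(checks, f.name)]


def ready_to_place_on_market(checks: ImporterChecks) -> bool:
    # Every listed item must be verified before the system is imported.
    return not outstanding_checks(checks)


checks = ImporterChecks(
    conformity_assessment_done=True,
    technical_documentation_drawn_up=True,
    ce_marking_present=True,
    eu_declaration_of_conformity_present=False,
    instructions_for_use_present=True,
    authorized_representative_appointed=True,
)
print(outstanding_checks(checks))
print(ready_to_place_on_market(checks))
```

Listing the outstanding items by name gives the importer something concrete to raise with the provider.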

Notably, if the importer has reason to believe that the high-risk AI system does not comply with the standards set in the AI Act, is falsified or is accompanied by falsified documents, the AI system must not be placed on the market until it has been brought into conformity. The importer must also inform the provider of the AI system, the authorized representative and the market surveillance authorities about the situation.

The importer should also indicate their name, registered trade name or registered trademark and the address on the packaging or documentation.

While a high-risk AI system is under their responsibility, importers must also ensure that storage and transport conditions do not compromise its compliance.

For 10 years after the AI system has been placed on the market or put into service, importers must keep a copy of the certificate issued by the notified body, the instructions for use and the EU declaration of conformity.
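The 10-year retention period is easy to compute from the date a system was placed on the market. The sketch below is illustrative only: the function name is hypothetical, and the handling of a 29 February start date (falling back to 28 February) is an assumption rather than a rule taken from the AI Act.

```python
from datetime import date

RETENTION_YEARS = 10  # period stated in this article


def retention_end(placed_on_market: date, years: int = RETENTION_YEARS) -> date:
    """Return the date on which the retention period ends."""
    target_year = placed_on_market.year + years
    try:
        return placed_on_market.replace(year=target_year)
    except ValueError:
        # 29 February with a non-leap target year: use 28 February (assumption).
        return placed_on_market.replace(year=target_year, day=28)


print(retention_end(date(2025, 3, 1)))
```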

They should also ensure that the technical documentation is made available to the authorities.

Distributors

The obligations of the distributors are:

- Verify that the high-risk AI system bears the CE marking and is accompanied by the EU declaration of conformity and instructions for use (provided by the provider and importer of the system).

- If, based on the information it possesses, the distributor finds that the high-risk AI system does not meet the requirements of the AI Act, it must not make the system available on the market until the requirements are met.

- Be responsible for the storage and transport conditions of the high-risk AI system.

- If a relevant competent authority requests information, provide all the information and documentation necessary to demonstrate the conformity of the system.

Third-party conformity assessment

Third-party conformity assessment is conducted by conformity assessment bodies and covers activities such as testing, certification and inspection. The conformity assessment is otherwise the responsibility of the provider, unless the AI system is used for biometrics. Notified bodies must be designated by the national competent authorities before they can perform these assessments.

An AI system is high risk if it serves as a safety component of a product or is itself an AI-integrated product. This applies to machinery, toys, recreational craft and personal watercraft, lifts, equipment and protective systems, radio equipment, pressure equipment, cableway installations, appliances and medical devices. In these cases, the safety component must undergo a third-party conformity assessment.

Obligations and requirements for third party management and assessment

Provide all necessary information to provider

If a third party is contracted to supply an AI system, tool, service or component to a provider, the written agreement must specify the information, capabilities, technical access and other assistance the third party will provide. This helps the provider of the high-risk AI system comply with the requirements of the AI Act. However, this obligation does not apply to third parties that make tools, services, processes or components available under a free and open-source license, except in the case of general-purpose AI models.

The AI Office can recommend standardized contract terms for high-risk AI system providers and third parties, which can be used on a voluntary basis.

Provide detailed technical documentation

A third party that provides tools or pre-trained systems should specify the methods and steps of how the AI system is used, integrated or modified by the provider.

Follow strict standards when offering certified compliance services

The AI Act allows AI system providers to outsource their data governance requirements to third-party service providers that offer certified compliance services. These services include verification of data governance, data set integrity, data training, validation and testing practices.

Develop appropriate measures for cybersecurity risks

To protect AI systems from cyberattacks, third parties contracted by providers of high-risk AI systems must develop robust security controls to protect their ICT infrastructure and digital assets.

The EU AI Act represents a groundbreaking step in AI regulation, establishing clear guidelines and responsibilities for everyone involved in the AI ecosystem. As AI continues to evolve at lightning speed, staying compliant with these regulations might seem like navigating through a maze of technical requirements.

This is where hoggo steps in as your trusty AI compliance sidekick! Think of us as your regulatory GPS, helping you navigate the complex landscape of AI governance. Whether you’re a provider, deployer, importer, or distributor, we’ve got your back.

Monica Aguilar

Monica is a legal and business professional with a diverse background in law, media, and corporate governance. Her career began in journalism, where she worked as a radio host, TV and print business journalist, and television producer, before transitioning into commercial law. As a barrister and solicitor in Fiji, she advised on corporate governance, foreign investment, mergers and acquisitions, contract law, and regulatory compliance.
