Here's our monthly newsletter for all your privacy, data protection, and AI news.

Make sure to subscribe at the bottom of this article if you want this recap to be delivered directly to your inbox.

Privacy News

- Meta launched ‘Pay for Data’ in Europe – After trying to rely on several legal bases for its targeted advertising practices, such as performance of a contract and legitimate interest, Meta is now launching paid subscriptions for Facebook and Instagram in Europe. Under Meta’s “pay or data” approach, users can choose between paying €10 a month and consenting to behavioural advertising. While many mistake this approach for “pay for privacy”, Meta will still process and sell personal data to third parties even if users pay the monthly fee, which only exempts them from being served targeted ads.

- The EDPB’s urgent binding decision noted that ‘The Irish DPC is currently evaluating this together with the Concerned Supervisory Authorities (CSAs).’

- Norway’s DPA has already stated that it has strong doubts about this proposed solution.

EU lawmakers to discuss AI rulebook’s revised governance structure – The AI Act is a landmark law that regulates artificial intelligence based on its capacity to cause risk. The European Commission drafted a compromise text, including a new proposal on regulating general-purpose AI models.

The AI Office is to monitor and enforce the rules on general-purpose AI (GPAI) models by requesting information, conducting ex-post evaluations, and appointing independent experts. It can also ask GPAI model providers to take measures, impose sanctions, and provide coordination support for joint investigations.

The European Artificial Intelligence Board – The compromise bill creates an AI Board with one representative per EU country, renewable once. It is tasked with ensuring consistent application of the AI rulebook across the bloc and advising on secondary legislation, codes of conduct, and technical standards.

Scientific Panel – The panel advises the AI Office on General Purpose AI models, including advising on classifying models with systemic risks. The panel also suggests relevant benchmarks for GPAI models.

- The EDPB provides guidelines on the applicability of Article 5(3) of the ePrivacy Directive to different technical solutions, such as URL and pixel tracking, local processing, tracking based on IP only, intermittent and mediated IoT reporting, and unique identifiers. Article 5(3) is best known for establishing the cookie notice and cookie consent requirements in the EU, but it covers more than just cookies.

- The EDPB stated that “information includes both non-personal and personal data, regardless of the method of storing and accessing these data.” According to this, Article 5(3) applies regardless of whether cookies or similar technologies are used to store or access information on someone’s terminal equipment.

- The EDPB argues that the ePrivacy Directive may apply to devices used by multiple users, and that terminal equipment can be made up of several individual pieces of hardware that together form a terminal. Smartphones, laptops, connected cars, connected TVs, and smart glasses are examples of this.

- In addition, the EDPB clarifies that Article 5(3) does not only apply to cookies, but also to tracking pixels, tracking links, other device fingerprinting techniques, certain types of local processing that transfer information outside of the user’s device, IoT reporting, and certain instances of IP tracking.

Regulatory Landscape

The US Senate introduces a bill on artificial intelligence research, innovation, and accountability – The bill defines artificial intelligence systems as engineered systems that generate outputs, such as content, predictions, recommendations, or decisions, for a given set of objectives using machine and human-based inputs.

The bill requires the Under Secretary of Commerce for Standards and Technology to carry out research on authenticating content generated by human authors and artificial intelligence.

Secure and binding methods are needed to append a statement of provenance to content, to ensure authenticity, to display clear and conspicuous statements of content provenance to the end user, and to ensure attribution for content creators.

The bill requires that individuals operating covered internet platforms that use generative AI systems provide notice to each user that the platform uses generative AI to generate content the user sees.

High-impact AI systems are subject to transparency requirements, and a transparency report must be submitted annually. The bill also sets out the required content of each transparency report.

More AI News - It’s All About AI These Days…

- Global guidelines for AI security (November 27) – The UK, US and 15 other countries announced that they have agreed on global guidelines for AI security. The guidelines are recommended for any system that uses AI, whether it has been created from scratch or built on top of tools and services provided by others. This also means tools built with ChatGPT’s API or HuggingFace open-source models.

According to the guidelines, there are 4 key areas in the AI system development life cycle:

- Secure design – This section contains guidelines that apply to the design stage of the AI system development life cycle. This includes understanding risks and threat modelling, raising staff awareness of threats and risks, mapping threats to the system, designing for security alongside performance, and considering security benefits and trade-offs when selecting an AI model.

- Secure development – This section contains guidelines that apply to the development stage of the AI system development life cycle. This includes securing the supply chain, identifying, tracking and protecting assets, documenting data, models and prompts, and managing technical debt.

- Secure deployment – This section contains guidelines that apply to the deployment stage of the AI system development life cycle. This includes securing the infrastructure, ongoing protection of the model, developing an incident response plan (IRP), and releasing and managing AI responsibly.

- Secure operation and maintenance – This section contains guidelines that apply to the operation and maintenance stage of the AI system development life cycle. This includes logging and monitoring, update management, and information sharing.

- The UK House of Lords has introduced draft AI legislation. Some key items from the Artificial Intelligence (Regulation) Bill are:

- An AI Authority should be established to ensure alignment with other “relevant” regulators, monitor AI regulatory frameworks, etc;

- The AI Authority shall take into account the need for businesses developing, deploying or using AI to be transparent; to test AI thoroughly and transparently; and to comply with applicable laws, including those relating to data protection, privacy, and intellectual property;

- The bill promotes inclusivity by design and the avoidance of discrimination;

- Businesses should be mandated (through Secretary of State regulations) to appoint AI Officers to supervise safe, ethical, unbiased, and non-discriminatory AI use;

- Those who train AI should be required to provide all third-party data and IP used in training to the AI Authority, to ensure that it is only used with informed consent (including “opt-out”).

- The California Bar adopts first-of-its-kind AI guidance for attorneys. Among other things, the guidance states that lawyers must not charge hourly fees for the time they save by using generative AI, must understand how the technology works, must engage in continuous learning about artificial intelligence biases, must ensure competent use of the technology, must apply diligence and prudence to facts and laws, and must ensure the accuracy of all generative AI outputs.

- The French Data Protection Authority, CNIL, has published guidelines on the use of AI, along with an action plan for data controllers and processors who process personal data using AI. CNIL offers resources to help data controllers and processors use AI to process personal data without violating the GDPR.

Where’s the fun without some Fines & Enforcement?

- The ICO fines three companies for illegal direct marketing. The Information Commissioner’s Office (ICO) has fined three financial services companies £170,000 for illegal direct marketing under the Privacy and Electronic Communications Regulations (PECR).

- AEPD fines Eurocollege Oxford English €90,000 for data protection violations, and QUALITY-PROVIDER S.L €20,000 for processing personal data without a legal basis and not cooperating with the DPA.

- CNIL issues 10 penalties over employee monitoring practices, totaling €97,000 – Complaints concerned the processing of geolocation data and the use of video surveillance of employees at their workstations while they were on breaks.

- Austria: NOYB files complaint against EU Commission for unlawful political microtargeting. According to the complaint, the Commission’s targeted advertising campaign on Twitter violated European data protection law by using sensitive data, such as political views and religious beliefs, to target users.

Take a Note

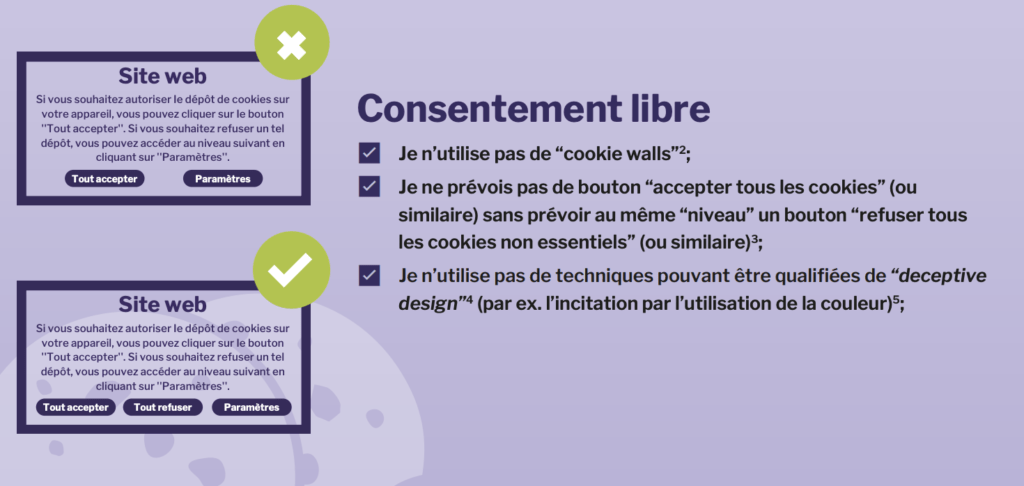

- The Belgian DPA published a cookie checklist, advising the use of a “Reject All” button alongside “Accept All”.

- Israel: PPA publishes guidance on IoT and smart homes, including recommendations and best practices for smart home Internet of Things development.

By subscribing below, you can receive this newsletter in your inbox every month.

Noa Kahalon

Noa is a certified CIPM, CIPP/E, and a Fellow of Information Privacy (FIP) from the IAPP. Her background consists of marketing, project management, operations, and law. She is the co-founder and COO of hoggo, an AI-driven SaaS platform for B2B trust where sellers can showcase & improve compliance and buyers can evaluate, manage and monitor them.

Samuel Solberg

Samuel is an experienced privacy consultant who holds CIPM, CIPP/E, and FIP certifications from the IAPP, as well as an LL.M. He is the co-founder and CEO of hoggo, a privacy tech startup that aims to eliminate privacy concerns for businesses.